Project team members: Youngwoong Cho, Danny Hong, Rena Kim, Ahzin Nam

Introduction

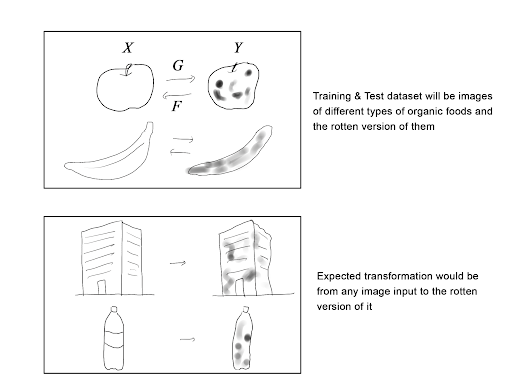

Our project began with a question: “What if inorganic things ‘decay’ like organic things?”

Sustainable design is becoming increasingly important. Using a deep convolutional neural network, we imagined a world where everything is organic, and thus perishes.

We trained a deep neural network to predict the “decayed” appearance of an arbitrary input image. The training data was a dataset of several hundred images of fresh and rotten apples, oranges, and bananas. We considered pix2pix, CycleGAN, and DiscoGAN as candidate models, and found that CycleGAN performed best.

Decay, in Biology

The word “decay” almost instinctively gives a negative impression because it is usually associated with death. After an organism dies, it biodegrades, or, less formally, decays. Decomposers such as fungi and bacteria break down the organic body, turning it into inorganic matter and blending it back into the earth.

The physical and chemical properties of a dead organism change as it decays. As a result, the appearance of organic matter becomes drastically different after it decays. Colors might change from vivid to dull, dark spots might appear, and cavities of varying sizes might form.

Decay and sustainability

Nowadays, however, humankind is more concerned about materials that do not decay, such as plastics. According to the Plastic Soup Foundation, plastic is non-biodegradable:

Plastic does not decompose. This means that all plastic that has ever been produced and has ended up in the environment is still present there in one form or another.

This is why sustainable design has been gaining popularity lately. The design of man-made products must take into account their environmental impact over time. Biodegradable plastics are one such attempt.

Decay, and Neural network

Using a deep convolutional neural network, we imagined a world where everything is organic, and thus perishes. We trained a CycleGAN model, a type of generative adversarial network (GAN), to predict the “decayed” appearance of arbitrary input images: a tennis ball, a rubber duck, etc.

Process

Problem definition

The goal of the project is to design and build a neural network model that is capable of translating the image input $I \in \mathbb{R}^{3 \times H \times W}$ to an image output $O \in \mathbb{R}^{3 \times H \times W}$, where $H$ and $W$ are the height and the width of the image, respectively.
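To make the $3 \times H \times W$ layout concrete, here is a minimal sketch that rearranges a tiny RGB image from rows of pixels into three channel planes. The helper names (`hwc_to_chw`, `shape`) and the sample image are hypothetical and chosen for illustration only; a real pipeline would likely use an array library instead of nested lists.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]        # (R, G, B), each in 0..255
ImageHWC = List[List[Pixel]]        # H rows of W pixels
ImageCHW = List[List[List[int]]]    # 3 channels, each H rows of W values


def hwc_to_chw(image: ImageHWC) -> ImageCHW:
    """Rearrange an H x W grid of RGB pixels into 3 x H x W channel planes."""
    return [[[pixel[c] for pixel in row] for row in image] for c in range(3)]


def shape(image: ImageCHW) -> Tuple[int, int, int]:
    """Return (channels, height, width) of a channel-first image."""
    return len(image), len(image[0]), len(image[0][0])


# Hypothetical 2 x 3 image (H = 2, W = 3), for illustration only.
tiny = [
    [(255, 0, 0), (0, 255, 0), (0, 0, 255)],
    [(10, 20, 30), (40, 50, 60), (70, 80, 90)],
]
chw = hwc_to_chw(tiny)
print(shape(chw))  # (3, 2, 3)
```

The model's output $O$ has the same shape as its input $I$, so a translated image can be displayed or fed back into the model without resizing.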

Dataset

The dataset, taken from “Fruits fresh and rotten for classification”, consists of train and test sets of fresh and rotten images of apples, bananas, and oranges. Table 1 (a) and Table 1 (b) list the number of images in each class.

Table 1 (a). Train set

| Fruit type | Fresh/Rotten | Number of images |
|---|---|---|
| Apple | Fresh | 1693 |
| Apple | Rotten | 2342 |
| Banana | Fresh | 1581 |
| Banana | Rotten | 2224 |
| Orange | Fresh | 1466 |
| Orange | Rotten | 1595 |

Table 1 (b). Test set

| Fruit type | Fresh/Rotten | Number of images |
|---|---|---|
| Apple | Fresh | 395 |
| Apple | Rotten | 601 |
| Banana | Fresh | 381 |
| Banana | Rotten | 530 |
| Orange | Fresh | 388 |
| Orange | Rotten | 403 |
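As a quick sanity check on class balance, the counts in the two tables can be totaled with a short script. This is a minimal sketch: it assumes the first table is the train split and the second is the test split, and the `summarize` helper is a hypothetical name used only here.

```python
# Image counts copied from Table 1 (a) and Table 1 (b).
# Assumption: the first table is the train split, the second the test split.
TRAIN = {
    "apple": {"fresh": 1693, "rotten": 2342},
    "banana": {"fresh": 1581, "rotten": 2224},
    "orange": {"fresh": 1466, "rotten": 1595},
}
TEST = {
    "apple": {"fresh": 395, "rotten": 601},
    "banana": {"fresh": 381, "rotten": 530},
    "orange": {"fresh": 388, "rotten": 403},
}


def summarize(split):
    """Total the fresh and rotten counts and compute the rotten share."""
    fresh = sum(counts["fresh"] for counts in split.values())
    rotten = sum(counts["rotten"] for counts in split.values())
    total = fresh + rotten
    return {
        "fresh": fresh,
        "rotten": rotten,
        "total": total,
        "rotten_share": round(rotten / total, 3),
    }


train_summary = summarize(TRAIN)
test_summary = summarize(TEST)
print(train_summary)
print(test_summary)
```

In both splits, rotten images make up a little more than half of the total, so the two domains are roughly balanced.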

Model selection

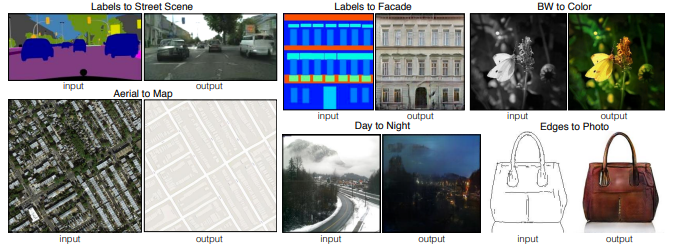

Various neural network architectures can carry out image-to-image translation. We narrowed the candidates down to three: pix2pix, DiscoGAN, and CycleGAN.

Pix2pix

Our first candidate was pix2pix.

However, it was not a viable option, since pix2pix requires a paired dataset. It was almost impossible to prepare several hundred fresh-rotten pairs of images.
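The difference between the paired and unpaired settings can be sketched as follows. This is an illustration under stated assumptions, not the data loader of any particular library: the function names and file names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def paired_samples(fresh: Sequence[str], rotten: Sequence[str]) -> List[Tuple[str, str]]:
    """Paired setting: fresh[i] and rotten[i] must show the same object."""
    if len(fresh) != len(rotten):
        raise ValueError("paired training needs exactly one target per input")
    return list(zip(fresh, rotten))


def unpaired_samples(
    fresh: Sequence[str], rotten: Sequence[str], steps: int, seed: int = 0
) -> List[Tuple[str, str]]:
    """Unpaired setting: draw independently from each domain at every step."""
    rng = random.Random(seed)
    return [(rng.choice(fresh), rng.choice(rotten)) for _ in range(steps)]


# Hypothetical file names, for illustration only.
fresh_images = ["fresh_0.jpg", "fresh_1.jpg"]
rotten_images = ["rotten_0.jpg", "rotten_1.jpg", "rotten_2.jpg"]

batch = unpaired_samples(fresh_images, rotten_images, steps=4)
print(len(batch))  # 4

try:
    paired_samples(fresh_images, rotten_images)
except ValueError as err:
    print("paired setting rejected:", err)
```

In the paired setting, every fresh image needs a photograph of the same fruit after rotting, which is what made the dataset impractical to collect. The unpaired setting only needs two independent piles of images.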

DiscoGAN

Our next candidate was DiscoGAN. We chose it because it can not only learn from an unpaired dataset but also change the geometry of the input. Since an apple, an orange, or a banana undergoes a morphological change as it rots, DiscoGAN seemed to be a good choice.

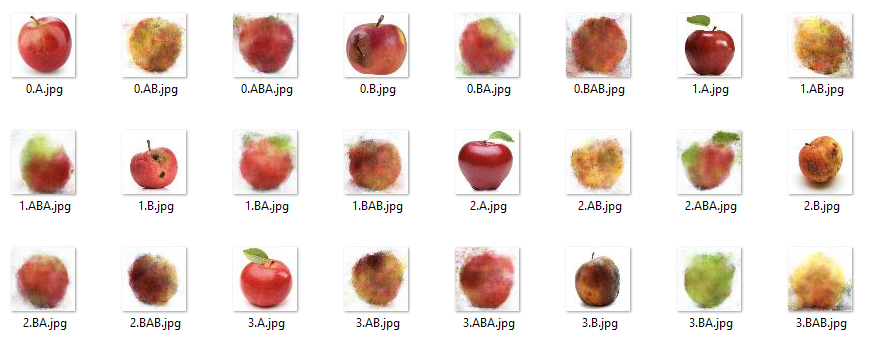

The following figure shows the resulting images after 50 epochs of training on the apple2rotten dataset, which consists of 184 images of fresh apples and 271 images of rotten apples.

0.A.jpg is the original image of an apple, 0.AB.jpg is the image mapped to the rotten-apple domain, and 0.ABA.jpg is the reconstructed image, mapped back to the original domain. As can be seen, the translated image (0.AB.jpg) shows changes not only in color but also in contour.

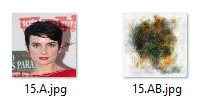

However, the transformation did not perform well at all on images that are not apples. Take a look at the following transformations.

When the model is given a non-apple image, it tries to force the image into an apple, which results in a drastic failure.

CycleGAN

Our next candidate was CycleGAN, because it can preserve the geometric characteristics of the input image while still handling an unpaired dataset.
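For reference, the shape-preserving behavior comes largely from the cycle-consistency term of the CycleGAN objective. The notation below is an assumption made for this write-up: $X$ is the fresh domain, $Y$ is the rotten domain, $G: X \rightarrow Y$ and $F: Y \rightarrow X$ are the two generators, $D_X$ and $D_Y$ are the discriminators, and $\lambda$ weights the cycle term.

$$
\mathcal{L}_{\text{cyc}}(G, F) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y \sim p_{\text{data}}(y)}\left[\lVert G(F(y)) - y \rVert_1\right]
$$

$$
\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\text{GAN}}(F, D_X, Y, X) + \lambda \, \mathcal{L}_{\text{cyc}}(G, F)
$$

Because a translated image must map back to its original under $F$, the generator is discouraged from discarding the structure of the input, which is consistent with the geometry being preserved.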

The following figures show the resulting images after 200 epochs of training on the banana2rotten dataset, which consists of 342 images of fresh bananas and 484 images of rotten bananas.

It seems to work! However, since our dataset mostly consists of bananas, the model performed well only on yellow images. Therefore, we decided to train the model on images of all the fruits (apples, oranges, and bananas) together, so that it would be ready for more diverse colors.

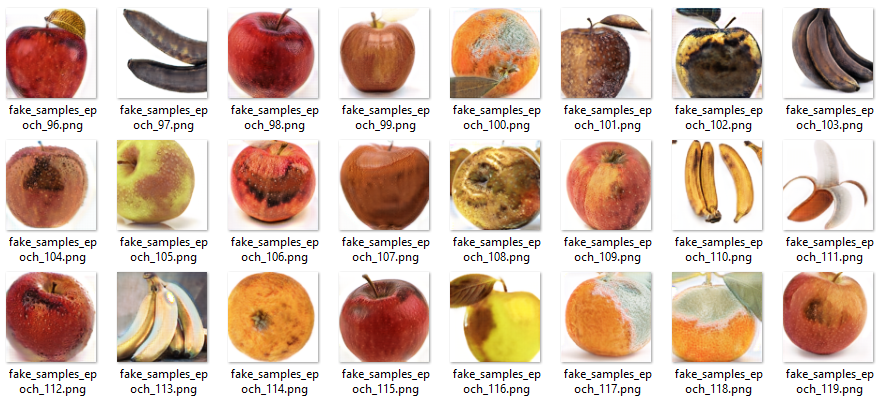

The following image shows some of the images generated by the model during training.

It can be seen that the model has learned to translate red, yellow, orange, and green images.

Result

The following images were generated by our model, which was trained to predict the decay of the input image.

The model was trained on 505 images of fresh fruits and 690 images of rotten fruits for 200 epochs.

Real-time inference from a webcam input

After training, our Decay model was deployed on a machine with a webcam to perform the image translation in real time.

The code for the project can be found at the following GitHub link.
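A real-time loop of this kind can be sketched as a generator that pulls frames, runs the model, and yields translated frames. This is a minimal sketch and not the project's actual code: the names `decay_stream`, `to_model_range`, and `to_pixels` are hypothetical, the frame source and the trained generator are passed in as plain Python objects, and the scaling of pixel values to $[-1, 1]$ is an assumption (a common convention for GAN generators) rather than something stated in this write-up.

```python
from typing import Callable, Iterable, Iterator, List

Frame = List[List[List[int]]]     # 3 x H x W, values in 0..255
Tensor = List[List[List[float]]]  # 3 x H x W, values in [-1, 1] (assumed)


def to_model_range(frame: Frame) -> Tensor:
    """Scale 0..255 pixel values to [-1, 1] (assumed model input range)."""
    return [[[v / 127.5 - 1.0 for v in row] for row in ch] for ch in frame]


def to_pixels(tensor: Tensor) -> Frame:
    """Scale [-1, 1] values back to 0..255 integers, clamping out-of-range values."""
    return [
        [[min(255, max(0, round((v + 1.0) * 127.5))) for v in row] for row in ch]
        for ch in tensor
    ]


def decay_stream(
    frames: Iterable[Frame], generator: Callable[[Tensor], Tensor]
) -> Iterator[Frame]:
    """Translate frames one at a time as they arrive from the camera."""
    for frame in frames:
        yield to_pixels(generator(to_model_range(frame)))


# Stand-ins for illustration: one tiny 3 x 1 x 2 frame and an identity "model".
fake_frames = [[[[0, 255]], [[128, 64]], [[10, 200]]]]
identity = lambda tensor: tensor
outputs = list(decay_stream(fake_frames, identity))
print(outputs == fake_frames)  # True
```

Keeping the camera and the model as injected arguments means the loop can be tested without hardware. In a deployment, `frames` would come from a webcam capture library and `generator` would wrap the trained fresh-to-rotten network.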
